I've been saying this for months, and a few others have recently jumped in. For those of us using HDTVs, this has been an issue for years, and with the recent explosion of HDMI on PC monitors, it's becoming a bigger one. On many displays, Nvidia's GPUs do not properly output full-range RGB over HDMI. But there is a fix. If you just want it fixed, run the utility below. Read on if you want the explanation.

http://blog.metaclassofnil.com/wp-content/uploads/2012/08/NV_RGBFullRangeToggle.zip

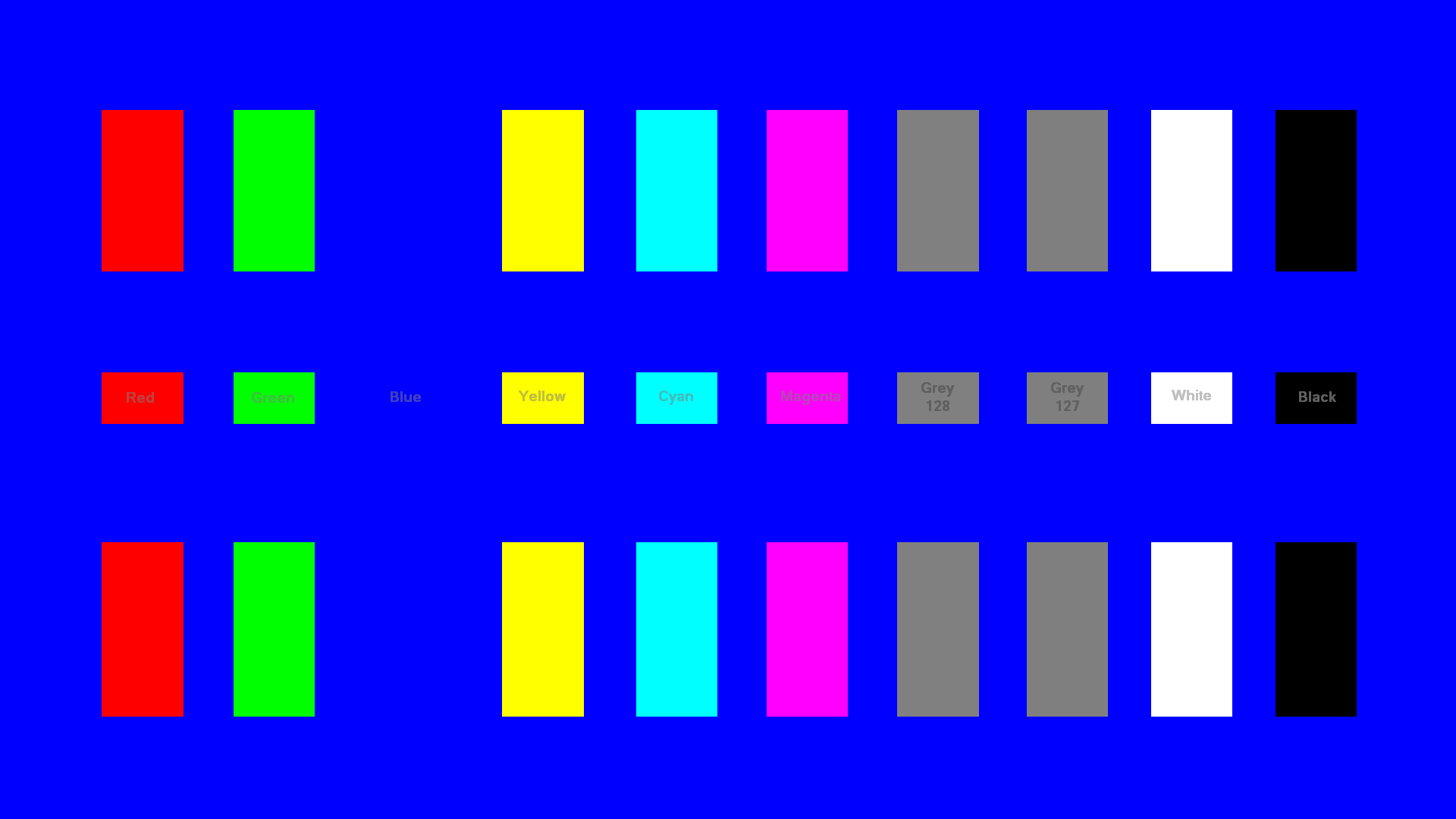

Basically, if you've ever used the menu of a modern HDTV, you've probably noticed a setting called "HDMI black levels" or just "black level." With 24-bit color, full-range RGB uses all 256 levels (0-255) per channel, so black is 0 and white is 255. Most digital video content uses limited range instead, where black is 16 and white is 235. Your connected device and your TV/monitor must be set the same way, or image quality will suffer. Unfortunately, there is no standard name for these settings, so here are the common ones:

-Option for "full-range," or "high" is 0-255

-Option for "limited-range," or "low" is 16-235
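To make the two ranges concrete, here's a minimal Python sketch of the usual linear mapping between them for a single 8-bit level. The function names are just for illustration; real video pipelines also deal with chroma and may dither instead of rounding.

```python
def limited_to_full(value: int) -> int:
    """Stretch a limited-range level (16-235) to full range (0-255).

    Limited range has 235 - 16 = 219 steps and full range has 255,
    so each step is scaled by 255/219. Levels outside 16-235 clamp.
    """
    return max(0, min(255, round((value - 16) * 255 / 219)))


def full_to_limited(value: int) -> int:
    """Squeeze a full-range level (0-255) into limited range (16-235)."""
    value = max(0, min(255, value))
    return 16 + round(value * 219 / 255)


if __name__ == "__main__":
    for level in (16, 126, 235):
        print(f"limited {level} -> full {limited_to_full(level)}")
    for level in (0, 128, 255):
        print(f"full {level} -> limited {full_to_limited(level)}")
```

Black maps to black (16 and 0) and white to white (235 and 255); only the numbers used to encode them differ.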

Setting the source device (PS3, Xbox 360, PC) to full range and the TV/monitor to limited range will crush blacks: everything from 0 to 16 is shown as the same black, so shadow detail disappears. The opposite (source at limited, display at full) gives a washed-out image, because black arrives as 16 and is shown as dark grey. If both are set to full range and you play a DVD or Blu-ray (e.g., on a PS3), that's fine, because the PS3, and your PC too, knows to map 16-235 video content into the 0-255 range. Your Nvidia driver even has this option under its video color settings. NOTE: That option only affects video playback, and only helps when the desktop itself is output at 0-255. It's useless if your desktop is at 16-235.
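Here's a rough Python simulation of the mismatch cases, assuming the display applies a simple linear stretch when set to limited range and passes levels through unchanged when set to full range. The names `shown_level` and `simulate` are hypothetical; the point is to show where crushed and washed-out images come from.

```python
def shown_level(signal: int, display_range: str) -> int:
    """Level (0-255) the panel actually draws for an incoming 8-bit signal."""
    if display_range == "full":
        return signal  # shown as-is
    if display_range == "limited":
        # 16 is treated as black and 235 as white; everything outside clamps.
        return max(0, min(255, round((signal - 16) * 255 / 219)))
    raise ValueError(f"unknown range: {display_range!r}")


def simulate(source_range: str, display_range: str) -> dict:
    """Send every level the source can produce and summarize what is drawn."""
    signals = range(0, 256) if source_range == "full" else range(16, 236)
    shown = [shown_level(s, display_range) for s in signals]
    return {
        "darkest_shown": min(shown),             # above 0 means grey "black"
        "shades_drawn_as_black": shown.count(0),  # above 1 means crushed shadows
        "distinct_levels": len(set(shown)),
    }


if __name__ == "__main__":
    for source in ("full", "limited"):
        for display in ("full", "limited"):
            print(f"source {source:7} -> display {display:7}: {simulate(source, display)}")
```

With a full-range source on a limited-range display, the 17 darkest shades (0-16) all come out as pure black, which is the crushing. With a limited-range source on a full-range display, nothing gets darker than 16, which is the washed-out look.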

So, when you connect your GPU to a monitor or TV over HDMI, the driver reads the display's EDID to find out whether it supports 0-255. If it doesn't, or the driver can't tell, it defaults to 16-235. New monitors are coming out faster than AMD or Nvidia can keep up with, so a lot of displays that actually expect 0-255 get sent 16-235 instead. The result is a washed-out image. AMD has fixed this by giving us a toggle in their drivers. Nvidia has not, and keeps ignoring the issue despite numerous threads on their own forums and numerous bug reports from me and others.
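I don't know exactly which EDID fields Nvidia or AMD check, so treat this as an assumption rather than their actual logic. One standard signal is the Video Capability Data Block in a CEA-861 EDID extension: its QS bit says the display lets the source choose the RGB quantization range. The Python sketch below reads that bit and, like the behavior described above, falls back to 16-235 when it's missing or unclear. The function names are hypothetical.

```python
from typing import Optional


def rgb_range_selectable(cea_block: bytes) -> Optional[bool]:
    """Return the QS bit from a CEA-861 extension block, or None if absent.

    Layout assumed (CEA-861): byte 0 is tag 0x02, byte 2 is the offset d
    where detailed timings start, and data blocks sit in bytes 4..d-1.
    Each data block header holds a tag code (bits 7-5) and payload length
    (bits 4-0). Tag 7 means "extended tag"; extended tag 0 is the Video
    Capability Data Block, whose next byte carries QS in bit 6.
    """
    if len(cea_block) < 4 or cea_block[0] != 0x02:
        return None
    end = min(cea_block[2], len(cea_block))
    i = 4
    while i < end:
        header = cea_block[i]
        tag, length = header >> 5, header & 0x1F
        payload = cea_block[i + 1 : i + 1 + length]
        if tag == 7 and len(payload) >= 2 and payload[0] == 0x00:
            return bool(payload[1] & 0x40)
        i += 1 + length
    return None


def default_rgb_range(cea_block: Optional[bytes]) -> str:
    """Use 0-255 only when the EDID positively allows it; otherwise 16-235."""
    if cea_block is not None and rgb_range_selectable(cea_block):
        return "0-255"
    return "16-235"


if __name__ == "__main__":
    # Hypothetical extension: an audio block, then a Video Capability block with QS set.
    edid_ext = bytes([0x02, 0x03, 11, 0x00, 0x23, 0x09, 0x07, 0x07, 0xE2, 0x00, 0x40])
    print(default_rgb_range(edid_ext))
```

A display whose EDID omits this block, or that the driver doesn't recognize, ends up on 16-235 under this kind of rule, which matches the washed-out case above.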

So what can you do? After installing a driver, run the utility linked at the top. You'll have to rerun it after every driver installation. Also note that it forces 0-255 output, so if you then connect a limited-range HDTV you'll get crushed blacks; run the toggle again to switch back.
