How to set up a ready-to-run, OpenSolaris-derived ZFS Storage Server

+configure it + add a WEB-GUI within minutes

more: napp-it mini HowTo 1 - setup a ZFS Server

How to set up an All-In-One Server:

VMware ESXi based + virtualized ZFS SAN server + virtualized network switch + other VMs like Windows, Linux...

more: napp-it mini HowTo 2 - setup a all-in one Server

with a main focus on free systems

I will keep this initial post up to date, so please re-read it from time to time!

ZFS is a revolutionary file system with nearly unlimited capacity and superior data security thanks to copy-on-write, checksums with automatic error repair, RAID-Z1/Z2/Z3 without the RAID-5/6 write-hole problem, an online file check/refresh feature (scrubbing) and the ability to create a nearly unlimited number of snapshots without delay or initial space consumption. ZFS boot snapshots let you go back to a former OS state. ZFS is stable, has been available since 2005 and is used in enterprise storage systems from Sun/Oracle and Nexenta.

Features like deduplication, online encryption (from ZFS V.31), triple-parity RAID and hybrid storage with SSD read/write cache drives are state of the art and are simply included ZFS properties. Volume, RAID and storage management are part of every ZFS system and are handled with just two commands: zfs and zpool.
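As a minimal sketch of the two-command idea, the shell function below prints (rather than runs) the zpool and zfs commands for a RAID-Z2 pool with an SSD read cache and an SSD write log, plus one filesystem. The function name, the pool name `tank` and the Solaris-style disk names are hypothetical; look up your real disk names with `format`, review the printed commands, and only then pipe them into `sh`.

```shell
#!/bin/sh
# Sketch: volume, RAID and cache management with only zpool + zfs.
# Prints the commands; names are hypothetical and must not contain spaces.

# hybrid_pool_cmds POOL READ_CACHE_SSD WRITE_LOG_SSD DISK...
hybrid_pool_cmds() {
  pool=$1 cache=$2 log=$3
  shift 3
  # double-parity RAID-Z2 vdev built from the remaining data disks
  echo "zpool create $pool raidz2 $*"
  # SSD read cache (L2ARC)
  echo "zpool add $pool cache $cache"
  # SSD write log (ZIL on a separate log device)
  echo "zpool add $pool log $log"
  # a filesystem is just a zfs dataset inside the pool
  echo "zfs create $pool/data"
}
```

Usage: `hybrid_pool_cmds tank c3t0d0 c3t1d0 c2t0d0 c2t1d0 c2t2d0 c2t3d0 c2t4d0 c2t5d0` shows the plan; append `| sh` once it looks right.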

ZFS is now part not only of Solaris-derived systems but also of FreeBSD. There are efforts to include it in Linux (ZoL). Even Apple (in the days before they transformed from Apple Computer into the current iPod company) had planned to integrate ZFS, but cancelled it just like they did with their Xserve systems.

But Solaris-derived systems are more than that. ZFS is not just a file-system add-on. Sun, the inventor of ZFS, developed a complete enterprise operating system with a unique integration of ZFS and other services, such as a fast, kernel-integrated SMB server that is AD and Windows-ACL compatible, and a fast NFS server, both managed as ZFS properties. Comstar, the included iSCSI framework, is fast and flexible and usable for complex SAN configurations. With Crossbow, the virtual switch framework, you can build complex virtual network switches in software, and DTrace helps to analyse the system. Service management is done via the unique svcadm tool. Virtualisation can be done at the application level with Zones. All of these features come from Sun, now Oracle, and are perfectly integrated into the OS with the same handling and without compatibility problems between them. This is the main reason why I prefer ZFS on Solaris-derived systems.

In 2010 Oracle bought Sun and closed the free OpenSolaris project.

There are now the following operating systems based on (the last free) OpenSolaris:

1. commercial options

- Oracle Solaris 11 (available 2011)

- Oracle Solaris 11 Express (free for non-commercial and evaluation use) with GUI and time-slider

download http://www.oracle.com/technetwork/server-storage/solaris11/downloads/index.html

the most advanced ZFS at the moment and the only one with encryption (based on "OpenSolaris" Build 151). I support it with my free napp-it Web-Gui.

download http://www.napp-it.org/downloads/index_en.html

Solaris 11 Express test installation: http://www.napp-it.org/pop11.html

-NexentaStor Enterprise Storage-Appliance

stable, based on OpenSolaris Build 134 with a lot of backported fixes

storage-only-use with the Nexenta Web-Gui

download trial at http://www.nexenta.com

-based on NexentaStor, there is a free NexentaStor Community Edition

(= NexentaStor with the same GUI, but without support, without some add-ons, limited to 18 TB raw capacity and storage-only use)

NOT allowed for production use - home use only -

download at http://www.nexentastor.org

Compare different Nexenta Versions at:

http://www.nexenta.com/corp/nexentastor-overview/nexentastor-versions

2. free options

best suited for a napp-it system:

-OmniOS, a minimal server distribution mainly for NAS and SAN use, with stable releases and weekly betas

free with optional commercial support, very close and up to date with Illumos kernel development

download http://omnios.omniti.com/

-OpenIndiana (the free successor of OpenSolaris), based on OpenSolaris Build 151 with GUI and time-slider

currently beta, usable for Desktop or Server use.

download http://openindiana.org/download/

other options:

-NexentaCore 3, end of life

was based on an OpenSolaris kernel Build 134 with a lot of backported fixes from newer builds. CLI only (without the NexentaStor Web-Gui), but runs with my free napp-it Web-Gui up to napp-it 0.8

-Illumian, the base of NexentaStor 4, is available.

http://www.illumian.org

Illumian is based on Illumos like OpenIndiana and uses the same binaries, but with Debian-like software installation and packaging.

-other distributions like BeleniX, EON or SchilliX

All of the above are originally based on OpenSolaris. Further development is done either by Oracle or by the community-driven Illumos project.

It was said that Oracle will freely publish its results after final releases. If so, we may see new Oracle features in the free options too, with a delay.

On the other side, there is the Illumos project. It is a free, community-driven fork of the OpenSolaris kernel with the base OS functions and some base tools. It aims to stay as close as possible to Oracle Solaris and to use its new features when they become available, but it also does its own development and offers it to Oracle for possible inclusion. Illumos is mainly financed by Nexenta and others with a focus on free ZFS-based systems. Illumos is already the base of SchilliX and will become the base of the next EON, BeleniX, Nexenta* and OpenIndiana. Illumos is intended to be completely free and open source.

3. Use cases:

Although there is a desktop option with OpenIndiana, Solaris was developed by Sun as an enterprise server OS with stability and performance in first place. It is best for:

NAS

-kernel-based, high-speed Windows CIFS/SMB file server (AD and ACL compatible, snapshots via Previous Versions)

-optionally also via Samba

-Apple file server with Time Machine and ACL support via netatalk

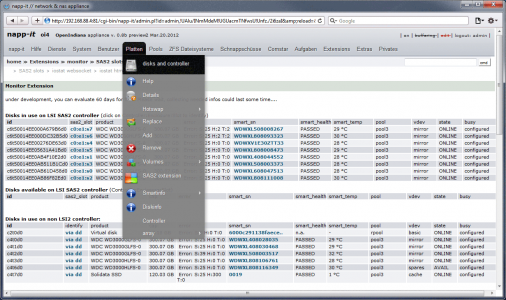

SAN

-NFS and iSCSI Storage for ESXi and XEN

Webserver

-AMP Stack (Apache, mySQL, PHP)

Backup and archive

-snapshots, online file checks with data refresh, checksums

Software based Virtualization via Zones

and of course enterprise databases

-one of the reasons Oracle bought Sun

Appliances

-All OpenSolaris-derived systems can be used as appliances and managed remotely via SSH or via browser and Web-Gui (the Nexenta GUI on NexentaStor, napp-it on the others).

3.1 All in One Solutions

You can run OpenSolaris-based systems not only on real hardware but also virtualized, ideally on ESXi (the free version works too) with PCI passthrough of the SAS controller and its disks. With ESXi 4.x you get nearly the same performance as on real hardware, provided you can give this VM enough RAM. This makes it possible to combine the three usual parts of a professional solution in one box: a virtualization server, a virtualized NAS/SAN server and a virtualized LAN/SAN/VLAN network switch built on ESXi features.

If you need hot snapshots of your VMs:

-create a snapshot within ESXi, then one with ZFS; then delete the ESXi snapshot

In case of problems, restore the ZFS snapshot. You can then revert to the hot snapshot within ESXi.

If you need higher availability, use two all-in-one boxes and replicate your VMs from the first to the second box via SMB copy from snapshots or via ZFS send.
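The ZFS part of the hot-snap procedure and the ZFS-send replication can be sketched as a small shell function that prints the commands for review. It assumes the VMs live on a dataset such as `tank/vm`, a second box reachable as `backupbox` with root SSH key login and a pool of the same name; these names, the snapshot names and the function itself are hypothetical. The ESXi snapshot is created before and deleted after this step, in the vSphere Client.

```shell
#!/bin/sh
# Sketch: ZFS snapshot + replication to a second all-in-one box.
# Prints the commands; names are hypothetical and must not contain spaces.

# snap_replicate_cmds DATASET NEWSNAP TARGETHOST [PREVSNAP]
snap_replicate_cmds() {
  ds=$1 new=$2 host=$3 prev=${4:-}
  # step 1 (ESXi): create the hot snapshot of the VMs first
  # step 2: ZFS snapshot of the NFS datastore folder
  echo "zfs snapshot $ds@$new"
  if [ -n "$prev" ]; then
    # incremental: only the changes since PREVSNAP,
    # which must already exist on the target box
    echo "zfs send -i $ds@$prev $ds@$new | ssh $host zfs receive -F $ds"
  else
    # first run: full copy of the snapshot
    echo "zfs send $ds@$new | ssh $host zfs receive -F $ds"
  fi
  # step 3 (ESXi): delete the hot snapshot again
}
```

Usage: `snap_replicate_cmds tank/vm daily2 backupbox daily1` prints an incremental run; leave out the last argument for the first full copy.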

Links about all-in-one:

http://wiki.xensource.com/xenwiki/VTdHowTo

http://www.servethehome.com/configure-passthrough-vmdirectpath-vmware-esxi-raid-hba-usb-drive/

http://www.napp-it.org/napp-it/all-in-one/index_en.html

3.2 Short how-to for my All-In-One concept:

-use a mainboard with an Intel 3420 or 5520 chipset + one or two Xeons

-you need a second disk controller; best are LSI 1068 or 2008 based SAS/SATA controllers in IT mode

(flash it to IT mode if you have one with IR/RAID firmware)

-install ESXi (onto a >= 32 GB boot drive connected to SATA in AHCI mode)

-activate VT-d in the mainboard BIOS

-activate PCI passthrough for this SAS controller in ESXi, reboot

-use the remaining space of your boot drive as a local datastore, install a virtualized Nexenta/Solaris on this local datastore, and add/pass through your SAS controller to this VM

-connect all other disks to your SAS/SATA controller. Nexenta/Solaris gets real access to these disks due to pass-through.

-set this VM to autostart first and install VMware Tools

-create a pool and share it via NFS and CIFS for easy clone/move/save/backup/snapshot access from Windows or from the console/Midnight Commander.

-import this NFS share as an NFS datastore within ESXi and store all other VMs, like Windows, Linux, Solaris or BSD, on it

-share it also via CIFS for easy cloning/backup of VMs from your ZFS folder or via Windows Previous Versions.
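The pool-sharing steps above might look like this on an illumos-based system (OpenIndiana, NexentaCore, OmniOS); napp-it does the same via its Web-Gui, so this is only a sketch that prints equivalent commands. The function name, `tank/vm` and the subnet are hypothetical, and the `root=` option is included on the assumption that ESXi mounts NFS as root and would otherwise be mapped to nobody. Oracle Solaris 11 changed the share syntax, so adapt it there.

```shell
#!/bin/sh
# Sketch: NFS datastore + SMB share for the all-in-one box (illumos syntax).
# Prints the commands; names are hypothetical and must not contain spaces.

# vm_share_cmds POOL FS ESXI_SUBNET   e.g. tank vm 192.168.1.0/24
vm_share_cmds() {
  pool=$1 fs=$2 net=$3
  # enable the kernel CIFS/SMB server and its dependencies
  echo "svcadm enable -r smb/server"
  echo "zfs create $pool/$fs"
  # NFS read/write for the ESXi subnet; root= keeps ESXi's root
  # user from being mapped to nobody
  echo "zfs set sharenfs=rw=@$net,root=@$net $pool/$fs"
  # the same folder as an SMB share for copy/backup/Previous Versions
  echo "zfs set sharesmb=on $pool/$fs"
}
```

On the ESXi side the share (mounted at `/tank/vm` by default) is then added as an NFS datastore in the vSphere Client, or on the ESXi console with `esxcfg-nas -a -o <storage-ip> -s /tank/vm <label>`.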

4. Hardware:

OpenSolaris-derived systems may run on a lot of current hardware, but often it is not a trouble-free experience. There are a few options that are always trouble-free, mostly based on current Intel server chipsets, Intel NICs and LSI 1068 (e.g. Intel SASUC8I) or LSI 2008 based SAS/SATA HBA (JBOD) controllers. Avoid hardware RAID entirely and, if possible, 4k drives (they will work but may have lower performance; TLER disks are not needed).

See

http://www.sun.com/bigadmin/hcl/

http://nexenta.org/projects/site/wiki/About_suggested_NAS_SAN_Hardware

Cheap and low-power Intel Atom systems or the new HP ProLiant MicroServer N36L (4 HD bays, max 8 GB ECC RAM, PCIe x16 and x1 slots) with 1 GB+ RAM are OK but run with reduced performance. You should not use goodies like deduplication, RAID-Z1-3 or encryption on them, or they will become really slow. Add as much RAM as possible.

If possible, I would always prefer server-class hardware. Best are the X8 series from SuperMicro with 3420 or 5520 chipset, like the micro-ATX Supermicro X8SIL-F. It is relatively cheap with an i3 CPU, fast but low-power with L-class Xeons (40W quads), can hold up to 32 GB ECC RAM and has fast Intel NICs, 3 x PCIe x8 slots and IPMI (browser-based remote keyboard and console access with remote on/off/reset, even in power-off state). It also supports VT-d, so you can use it as an ESXi virtualization server with PCI passthrough to an embedded, virtualized Nexenta or Solaris-based ZFS storage server.

read about that mainboard http://www.servethehome.com/supermicro-x8silf-motherboard-v102-review-whs-v2-diy-server/

minimum is a 4 GB boot drive + 1 GB RAM + 32-bit CPU (Nexenta)

best is a 64-bit dual/quad-core CPU with 8 GB+ ECC RAM (all used for caching)

If you want to use deduplication, you need 2-3 GB RAM per TB of data
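The RAM rules of thumb above can be turned into a rough calculator. This is a sketch under one stated assumption: the 2-3 GB per TB for deduplication is added on top of the 8 GB baseline, which the rule itself does not specify. The function name is hypothetical and only whole TB values are handled.

```shell
#!/bin/sh
# Sketch: rough RAM sizing from the rules of thumb (whole TB only).
# Assumption: dedup RAM comes on top of the 8 GB baseline.

# ram_estimate_gb DATA_TB DEDUP(yes|no)
ram_estimate_gb() {
  tb=$1 dedup=$2
  base=8                        # "best is ... 8 GB+ ECC RAM"
  if [ "$dedup" = yes ]; then
    low=$(( base + 2 * tb ))    # 2 GB per TB of data
    high=$(( base + 3 * tb ))   # 3 GB per TB of data
    echo "${low}-${high}"
  else
    echo "$base"
  fi
}
```

For example, `ram_estimate_gb 4 yes` prints `16-20` (GB) for 4 TB of deduplicated data.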

Usually I would say, trouble-free =

- X8 series from SuperMicro with 3420 or 5520 Intel server chipsets (from 150 Euro),

- X-class or L-class low-power Xeons (dual-core, better quad-core)

- onboard SATA with Intel ICH10 (AHCI)

- always use Intel NICs

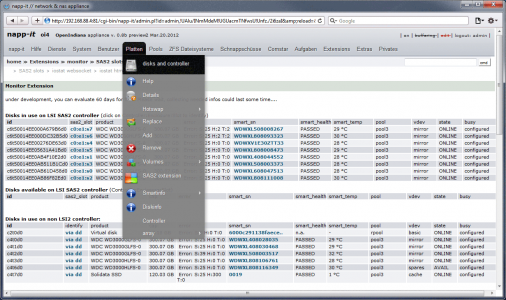

- LSI controllers based on the 1068E (always the best) or SAS2 LSI 2008 with IT firmware

about onboard LSI 1068 or LSI 2008 controllers:

they are flashed in RAID mode by default,

consider reflashing them to IT mode (native SAS without RAID functions)

see http://www.servethehome.com/flashing-intel-sasuc8i-lsi-firmware-guide/

- Avoid expanders when not needed (you need them for external enclosures or if you have more disks than you can supply with additional controllers), avoid hardware RAID entirely for data pools, and avoid other controllers or use them only if others have reported success

Use mainboards with enough x4 or x8 slots for the needed NICs (1 Gb now / 10 Gb next) and storage controllers.

If you follow these simple rules, you will have fun.

I would avoid SAS2 controllers such as the LSI 2008 when not needed, because of the WWN problem

(the LSI 2008 reports a disk-related WWN instead of a controller-based ID)

read http://www.nexenta.org/boards/1/topics/823

4b. some general suggestions:

-keep it as simple as possible

-performance improvements below 20% are not worth more than a moment's thought

-if you have the choice, avoid parts that may cause problems (e.g. expanders, 4k disks, hardware RAID, Realtek NICs etc.)

-do not buy old hardware

-do not buy very new hardware

-do not buy it too small

-do not buy to satisfy "forever needs"; plan only up to 2 years ahead, but think about growing data, backups and whether you need virtualization

follow the best-practice suggestions whenever possible

(with ZFS there are not too many)

or, to put it the German way:

"Geiz ist geil" ("stinginess is cool") is just as dumb as

"Mann gönnt sich ja sonst nichts" ("a man deserves a treat now and then"), and yes, I know the idiom is normally spelled "man", not "Mann"

5. ZFS server OS installation (Nexenta*, Solaris 11 Express or OpenIndiana, with the suggested easy-to-use Live edition or with the text installer)

NexentaCore is CLI only. With OpenIndiana or Solaris 11 Express I would prefer the GUI Live version, because it is much more user-friendly and you get the time-slider feature

(You can select a folder and go back in time, really nice to have)

Download the ISO, boot from it and install the OS to a separate, optionally mirrored boot drive (always use AHCI with onboard SATA)

The OS always uses the whole boot disk, so installation is very easy: just answer the questions about your time zone, keyboard and preferred network settings

On Nexenta3 there is currently a bug with DHCP. After installation with DHCP, you have to enable auto-configuration via: svcadm enable nwam
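The optional boot mirror is added after installation. A sketch for x86 systems with GRUB legacy (OpenIndiana 151, Solaris 11 Express) follows; it assumes the second disk is at least as large as the first, and the disk names and function are hypothetical. The commands are printed so you can run them one at a time and check each result; the constantin.glez.de link in section 8 describes the procedure in detail.

```shell
#!/bin/sh
# Sketch: turn the single-disk root pool into a mirror (x86, GRUB legacy).
# Prints the steps; run them one by one as root. Names are hypothetical.

# mirror_rpool_cmds CURRENT_BOOT_DISK NEW_DISK   e.g. c0t0d0 c0t1d0
mirror_rpool_cmds() {
  src=$1 dst=$2
  # one Solaris fdisk partition spanning the whole new disk
  echo "fdisk -B /dev/rdsk/${dst}p0"
  # copy the slice table (VTOC) from the boot disk to the new disk
  echo "prtvtoc /dev/rdsk/${src}s2 | fmthard -s - /dev/rdsk/${dst}s2"
  # attach slice 0 of the new disk as a mirror of the root pool
  echo "zpool attach -f rpool ${src}s0 ${dst}s0"
  # wait until this reports the resilver as completed
  echo "zpool status rpool"
  # make the second disk bootable
  echo "installgrub -m /boot/grub/stage1 /boot/grub/stage2 /dev/rdsk/${dst}s0"
}
```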

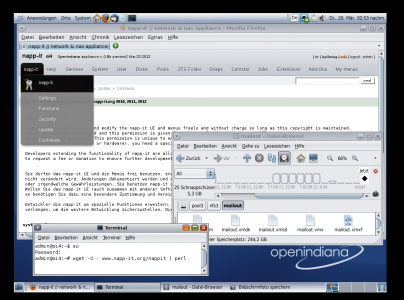

To configure a ready-to-run ZFS server with all tools and extras like the Web-Gui, AMP and AFP, you can use my online installer script

6. First-time configuration or update of your NAS with the napp-it web-gui, tools and additional NAS/www services

Install NexentaCore, OpenIndiana or Solaris 11 Express, reboot,

log in as root (or as a user, then enter su for root access from your home directory) and enter:

wget -O - www.napp-it.org/nappit | perl

The napp-it online installer configures your OS (users, permissions and SMB settings), adds a web-gui + mini_httpd, and adds/compiles tools like netcat, mc, iperf, bonnie and smartmontools

That's all, your NAS is ready to run.

Manage it via browser at http://ip:81

You can set up most things via the web-gui; some, like ACL settings

or snapshot access via Previous Versions, are more comfortable within Windows.

7. add-ons:

On top of a running napp-it installation you can add the AMP web server stack via:

wget -O - www.napp-it.org/amp | perl

The AMP online installer sets up Apache, MySQL and PHP

or add Apple AFP+Time Machine support via:

wget -O - www.napp-it.org/afp | perl

The AFP installer compiles and configures a netatalk AFP server with Time Machine support. Share settings are included in the napp-it web-gui in the menu folders.

8. Some useful links about ZFS:

excellent summary of practical tips about ZFS

http://www.nex7.com/readme1st

newest docs from Oracle

http://docs.oracle.com/cd/E23824_01/ (Oracle Solaris 11 manuals)

http://www.c0t0d0s0.org/archives/6639-Recommended-SolarisSun-Books.html (recommended books)

http://hub.opensolaris.org/bin/view/Main/documentation (overview about docs)

http://www.c0t0d0s0.org/pages/lksfbook.html (less known features)

http://dlc.sun.com/pdf/819-5461/819-5461.pdf (ZFS manual)

http://www.solarisinternals.com/ (best source of Howtos)

http://www.solarisinternals.com/wiki/index.php/ZFS_Best_Practices_Guide (best practice guide)

http://www.solarisinternals.com/wiki/index.php/ZFS_Evil_Tuning_Guide (best tuning guide)

http://www.oracle.com/us/products/servers-storage/storage/storage-software/031857.htm (oracle info)

http://hub.opensolaris.org/bin/view/Project+cifs-server/docs (cifs server)

http://nexenta.org/projects/site/wiki (Nexenta wiki)

http://www.zfsbuild.com/ (howto and performance tests)

ten-ways-easily-improve-oracle-solaris-zfs-filesystem-performance (10 ways to improve performance)

favorite-oracle-solaris-performance-analysis-commands (analysis tools)

http://download.oracle.com/docs/cd/E19963-01/index.html (Oracle Solaris 11 Express Information Library )

http://constantin.glez.de/blog/2011/03/how-set-zfs-root-pool-mirror-oracle-solaris-11-express (mirrored bootdrive on Solaris/OpenIndiana)

German ZFS manual (Handbuch)

http://dlc.sun.com/pdf/820-2313/820-2313.pdf (ZFS Handbuch)

about silent data corruption (schleichende Datenfehler)

(in German; use Google Translate if needed)

http://www.solarisday.de/downloads/storageserver0609nau.pdf

http://www.fh-wedel.de/~si/seminare/ws08/Ausarbeitung/02.zfs/funktionen.html#n17

Help forums, wiki and IRC

http://echelog.matzon.dk/logs/browse/openindiana/

http://echelog.matzon.dk/logs/browse/nexenta/

http://echelog.matzon.dk/logs/browse/illumos

https://forums.oracle.com/forums/forum.jspa?forumID=1321

http://wiki.openindiana.org/oi/OpenIndiana+Wiki+Home

https://www.illumos.org/projects/site/boards

FAQ

Q What are the three biggest advantages of ZFS?

A1 Data security thanks to real data checksums, no RAID write-hole problem and online scrubbing against silent corruption

A2 nearly unlimited data and system snapshots and writable clones without initial space consumption, thanks to copy-on-write

A3 ease of use: it just works and is stable, without special RAID controllers or complex setup
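The online scrubbing from A1 is started per pool with `zpool scrub`. A minimal sketch that prints the scrub commands for some hypothetical pools followed by a status check; a weekly run could also be scheduled as a root cron entry such as `0 3 * * 0 /usr/sbin/zpool scrub tank`.

```shell
#!/bin/sh
# Sketch: start an online scrub on each given pool, then show the result.
# Prints the commands; pool names are hypothetical.

# scrub_cmds POOL...
scrub_cmds() {
  for pool in "$@"; do
    # reads every block, verifies its checksum, repairs from redundancy
    echo "zpool scrub $pool"
  done
  # progress, repaired data and any unrecoverable errors
  echo "zpool status -v"
}
```

Usage: `scrub_cmds tank backup | sh`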

Q Can I expand/shrink a pool?

A You cannot shrink a pool (who wants to?)

A A pool is built from one or more vdevs (e.g. RAID-Z)

A You can expand a pool by adding more RAID-Z vdevs, but you cannot expand a single RAID-Z vdev of a pool
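Expanding a pool therefore means adding a whole new vdev. A sketch with hypothetical pool and disk names that prints the commands: running the printed `zpool add` first with `-n` only shows the resulting layout without changing anything, a preview worth doing because a RAID-Z vdev cannot be removed again once added.

```shell
#!/bin/sh
# Sketch: grow a pool by one additional RAID-Z2 vdev.
# Prints the commands; names are hypothetical and must not contain spaces.

# grow_pool_cmds POOL DISK...
grow_pool_cmds() {
  pool=$1
  shift
  # new vdev; new data is then striped over all vdevs of the pool
  echo "zpool add $pool raidz2 $*"
  # check the new vdev layout and the added capacity
  echo "zpool status $pool"
  echo "zpool list $pool"
}
```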

Q Is ZFS faster than other systems?

A ZFS was developed mainly to satisfy the needs of data centers, with the highest data security in mind

A With a single disk it is mostly slower than other systems, but with much better data security

A It scales perfectly up to hundreds of disks in the petabyte range. Performance improves with every vdev you add to a pool.
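Why more vdevs mean more performance can be illustrated with a rough estimate. This sketch assumes the common rule of thumb that one RAID-Z vdev delivers about the random IOPS of a single disk, and that each vdev stores data on (disks - parity) drives; both are approximations that ignore metadata, padding and caching, and the function name and sample figures are hypothetical.

```shell
#!/bin/sh
# Sketch: rough usable capacity and random IOPS of a RAID-Z pool.
# Rule-of-thumb numbers only, not a benchmark.

# pool_estimate VDEVS DISKS_PER_VDEV PARITY DISK_TB DISK_IOPS
pool_estimate() {
  vdevs=$1 disks=$2 parity=$3 size=$4 iops=$5
  # each RAID-Z vdev stores data on (disks - parity) drives
  usable=$(( vdevs * (disks - parity) * size ))
  # rule of thumb: random IOPS of one RAID-Z vdev ~ one disk
  echo "usable_tb=$usable random_iops=$(( vdevs * iops ))"
}
```

For example, two 6-disk RAID-Z2 vdevs of 3 TB disks at about 100 IOPS each (`pool_estimate 2 6 2 3 100`) give `usable_tb=24 random_iops=200`, twice the figures of a single such vdev.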

Q On which platforms can I use ZFS?

A The newest features are in Oracle Solaris*, but you must pay for a support contract if you need patches or use it commercially

A The best free alternatives are OpenIndiana or NexentaCore (dead, see -> Illumos iCore project), or the commercial NexentaStor

A On non-Solaris platforms you can use FreeBSD; I would not use the current implementations on Linux

A The newest features, or all ZFS features, are only available on Solaris-based systems

Please read my mini HowTos

napp-it mini HowTo 1 - setup a ZFS Server

napp-it mini HowTo 2 - setup a all-in one Server

Gea

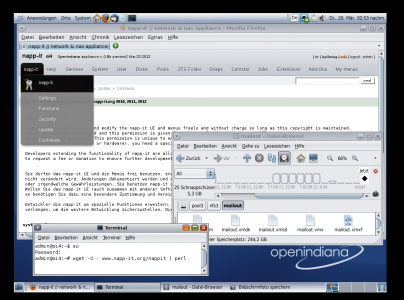

6. First time configuration or update your NAS with the napp-it web-gui, toolss and additional NAS/www-services

Install NexentaCore, OpenIndiana or Solaris Express 11, reboot and

login as root (or as user, enter su for root access from your home-directory) and enter:

wget -O - www.napp-it.org/nappit | perl

The napp-it online installer will configure your OS (users, permissions and SMB settings), add a web-gui + minihttpd, and add/compile tools like netcat, mc, iperf, bonnie and smartmontools

That's all, your NAS is ready to run.

Manage it via your browser at http://ip:81 (ip = the IP address of your server)

You can set up most things via the web-gui; some, like ACL settings or snapshot access via "Previous Versions", are more comfortable within Windows.

7. Add-ons:

On top of a running napp-it installation you can add an AMP web server stack via:

wget -O - www.napp-it.org/amp | perl

The AMP online installer will set up Apache, MySQL and PHP

or add Apple AFP + Time Machine support via:

wget -O - www.napp-it.org/afp | perl

The AFP installer will compile and configure a netatalk AFP server with Time Machine support. Share settings are included in the napp-it web-gui in menu Folders.

8. Some useful links about ZFS:

excellent summary of practical tips about ZFS

http://www.nex7.com/readme1st

newest docs from Oracle

http://docs.oracle.com/cd/E23824_01/ (Oracle Solaris 11 manuals)

http://www.c0t0d0s0.org/archives/6639-Recommended-SolarisSun-Books.html (recommended books)

http://hub.opensolaris.org/bin/view/Main/documentation (overview about docs)

http://www.c0t0d0s0.org/pages/lksfbook.html (less known features)

http://dlc.sun.com/pdf/819-5461/819-5461.pdf (ZFS manual)

http://www.solarisinternals.com/ (best source of Howtos)

http://www.solarisinternals.com/wiki/index.php/ZFS_Best_Practices_Guide (best practice guide)

http://www.solarisinternals.com/wiki/index.php/ZFS_Evil_Tuning_Guide (best tuning guide)

http://www.oracle.com/us/products/servers-storage/storage/storage-software/031857.htm (oracle info)

http://hub.opensolaris.org/bin/view/Project+cifs-server/docs (cifs server)

http://nexenta.org/projects/site/wiki (Nexenta wiki)

http://www.zfsbuild.com/ (howto and performance tests)

ten-ways-easily-improve-oracle-solaris-zfs-filesystem-performance (10 ways to improve performance)

favorite-oracle-solaris-performance-analysis-commands (analysis tools)

http://download.oracle.com/docs/cd/E19963-01/index.html (Oracle Solaris 11 Express Information Library)

http://constantin.glez.de/blog/2011/03/how-set-zfs-root-pool-mirror-oracle-solaris-11-express (mirrored bootdrive on Solaris/OpenIndiana)

German ZFS manual (Handbuch)

http://dlc.sun.com/pdf/820-2313/820-2313.pdf (ZFS Handbuch)

about silent data corruption (schleichende Datenfehler)

(in German, translate via Google if needed)

http://www.solarisday.de/downloads/storageserver0609nau.pdf

http://www.fh-wedel.de/~si/seminare/ws08/Ausarbeitung/02.zfs/funktionen.html#n17

Help forums, wiki and IRC

http://echelog.matzon.dk/logs/browse/openindiana/

http://echelog.matzon.dk/logs/browse/nexenta/

http://echelog.matzon.dk/logs/browse/illumos

https://forums.oracle.com/forums/forum.jspa?forumID=1321

http://wiki.openindiana.org/oi/OpenIndiana+Wiki+Home

https://www.illumos.org/projects/site/boards

FAQ

Q What are the three biggest advantages of ZFS?

A1 Data security thanks to real data checksums, no RAID write hole problem and online scrubbing against silent corruption

A2 Nearly unlimited data snapshots + system snapshots and writable clones without initial space consumption, thanks to copy-on-write

A3 Ease of use: it just works and is stable without a special RAID controller or complex setup
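A1 and A2 on the command line, as a sketch: the pool "tank", the filesystem "tank/data", the snapshot name and the function name zfs_basics are hypothetical examples, and run() only prints each command unless DRY_RUN=0 is set:

```shell
#!/bin/sh
# DRY_RUN=1 (default) only prints the commands; DRY_RUN=0 executes them (as root).
run() { if [ "${DRY_RUN:-1}" = 1 ]; then echo "+ $*"; else "$@"; fi; }

zfs_basics() {
  pool=$1; fs=$2; snap=$3
  # A1: read every block, verify its checksum and repair it from redundancy.
  run zpool scrub "$pool"
  # Shows scrub progress and any files with unrecoverable errors.
  run zpool status -v "$pool"
  # A2: a snapshot is created instantly and uses no space until data changes.
  run zfs snapshot "$fs@$snap"
  run zfs list -t snapshot -r "$fs"
  # A2: a writable clone based on that snapshot, also without initial space use.
  run zfs clone "$fs@$snap" "$fs-clone"
}
```

For example zfs_basics tank tank/data monday prints the five commands for that pool.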

Q Can I expand/shrink a pool?

A You cannot shrink a pool (who wants to?)

A A pool is built from one or more vdevs (e.g. mirrors or RaidZ sets)

A You can expand a pool by adding more vdevs (e.g. another RaidZ), but you cannot expand a single RaidZ vdev of a pool by adding disks
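A worked example with rough numbers: a pool of one raidz2 vdev with six 2 TB disks gives about (6 - 2) x 2 = 8 TB usable; adding a second, identical raidz2 vdev doubles that to about 16 TB. A sketch of the arithmetic and the command; the pool name, the disk ids and the function names are hypothetical:

```shell
#!/bin/sh
# DRY_RUN=1 (default) only prints the commands; DRY_RUN=0 executes them (as root).
run() { if [ "${DRY_RUN:-1}" = 1 ]; then echo "+ $*"; else "$@"; fi; }

# Rough usable capacity of one RaidZ vdev in TB (integers only):
# (disks - parity) * disk size. Ignores metadata overhead and TB/TiB difference.
raidz_usable() {
  disks=$1; parity=$2; size_tb=$3
  echo $(( (disks - parity) * size_tb ))
}

# Add one more raidz2 vdev to an existing pool; disk ids follow the pool name.
# Note: a RaidZ vdev added with "zpool add" cannot be removed from the pool again.
grow_pool() {
  pool=$1; shift
  run zpool add "$pool" raidz2 "$@"
  run zpool status "$pool"
}
```

For example grow_pool tank c2t0d0 c2t1d0 c2t2d0 c2t3d0 c2t4d0 c2t5d0 prints the zpool add command for six new disks.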

Q Is ZFS faster than other systems?

A ZFS was developed mainly for the needs of datacenters, with highest data security in mind

A With a single disk it is mostly slower than other file systems, but with much better data security

A It scales very well up to hundreds of disks in the petabyte range. Performance improves with every vdev you add to a pool.
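Why vdevs matter, as a rough sketch: a common rule of thumb (an assumption, not a measurement) is that for small random I/O each RaidZ vdev delivers about the IOPS of one of its disks. Twelve disks at about 100 IOPS each would then give roughly 100 IOPS as one raidz2 vdev, but roughly 200 IOPS as two raidz2 vdevs. zpool iostat -v shows how the load is spread over the vdevs; the function names are hypothetical:

```shell
#!/bin/sh
# DRY_RUN=1 (default) only prints the commands; DRY_RUN=0 executes them.
run() { if [ "${DRY_RUN:-1}" = 1 ]; then echo "+ $*"; else "$@"; fi; }

# Rule-of-thumb estimate: random IOPS grow with the number of vdevs,
# not with the number of disks inside a RaidZ vdev.
estimate_iops() {
  vdevs=$1; disk_iops=$2
  echo $(( vdevs * disk_iops ))
}

# Per-vdev read/write statistics of a pool, refreshed every 5 seconds.
watch_vdevs() {
  run zpool iostat -v "$1" 5
}
```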

Q On which platform can I use ZFS?

A The newest features come from Oracle Solaris*, but you must pay for a support contract if you need patches or use it commercially

A The best free alternatives are OpenIndiana or NexentaCore (discontinued, see the Illumos iCore project), or the commercial NexentaStor

A On non-Solaris platforms you can use FreeBSD; I would not use the current implementations on Linux

A The newest, or all, ZFS features are only available on Solaris-based systems
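Which pool and filesystem versions your OS supports, and which ones a pool actually uses, can be checked before moving a pool between systems. Keep in mind that a pool upgraded to a newer version on Oracle Solaris can no longer be imported on a system that only supports an older version, so do not run zpool upgrade casually. A sketch; the pool name and the function name are hypothetical:

```shell
#!/bin/sh
# DRY_RUN=1 (default) only prints the commands; DRY_RUN=0 executes them.
run() { if [ "${DRY_RUN:-1}" = 1 ]; then echo "+ $*"; else "$@"; fi; }

show_versions() {
  pool=$1
  # All pool and filesystem versions this OS release knows about (changes nothing).
  run zpool upgrade -v
  run zfs upgrade -v
  # Versions currently used by the pool and by each of its filesystems.
  run zpool get version "$pool"
  run zfs get -r version "$pool"
}
```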

Please read my mini HowTos:

napp-it mini HowTo 1 - setup a ZFS Server

napp-it mini HowTo 2 - setup a all-in one Server

Gea
