How are you running your single hex system with one or more GPU clients? Just letting them duke it out for CPU cycles costs me 15K on a P2685, so that is no good. -smp 11 with affinity set to cores 0-10 and the GPU client set to core 11 is the best I have come up with, but it still costs me 10K on the bigadv. Just -smp 11 with no GPU is 3500 PPD faster than -smp 11 with the GPU client. I would think that isolating them with WinAFC would mean the GPU client should not affect the SMP client, but it definitely still does. I have been screwing with this all day, and I still don't have a clue how to make this work better. Any help is much appreciated.
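For reference, the split described above (SMP on logical CPUs 0-10, GPU on 11) corresponds to two non-overlapping processor affinity bitmasks of the kind Windows uses, where bit i means "may run on logical processor i". A minimal Python sketch, assuming 12 logical processors numbered 0-11; the helper names are hypothetical and this does not reproduce WinAFC's own configuration format:

```python
# Hypothetical helpers: build Windows-style processor affinity bitmasks,
# where bit i set means "may run on logical processor i".

def affinity_mask(cpus):
    """Return an integer bitmask with one bit set per logical CPU index."""
    mask = 0
    for cpu in cpus:
        if cpu < 0:
            raise ValueError("CPU index must be non-negative")
        mask |= 1 << cpu
    return mask


def split_affinity(total_threads, gpu_cpus):
    """Give the GPU client(s) the listed CPUs and the SMP client the rest.

    Returns (smp_mask, gpu_mask); the two masks never overlap.
    """
    gpu = set(gpu_cpus)
    if (not gpu) or (not gpu <= set(range(total_threads))):
        raise ValueError("GPU CPUs must be a non-empty subset of the available threads")
    smp = [c for c in range(total_threads) if c not in gpu]
    return affinity_mask(smp), affinity_mask(sorted(gpu))


if __name__ == "__main__":
    # Single hex with HT: 12 logical CPUs, SMP on 0-10, GPU on 11.
    smp_mask, gpu_mask = split_affinity(12, [11])
    print(f"SMP mask: {smp_mask:#x}, GPU mask: {gpu_mask:#x}")  # SMP mask: 0x7ff, GPU mask: 0x800
```

On Hyper-Threaded Intel CPUs, Windows usually numbers the two logical processors of each physical core consecutively, so logical CPUs 10 and 11 would typically be the same physical core here. The masks keep the clients' threads apart but not their shared core.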


Single Hex + GPU folders - I need your help

- Thread starter musky


On my box with a 980X and GTX 285, I just let them duke it out. I haven't done any testing on SMP only, etc.

Since my GPU boxen are down, today I added two of my GTX 295s to the SR-2. I'm running -smp 23 on the bigadv client, and have manually assigned the four GPU processes to thread 0 and the SMP process to all the others. The PPD of the bigadv unit doesn't seem to have been affected, although I've currently got a P2684 so it might just be too low to tell. (It should be 4% slower, and it probably is.) The GPUs are running well.
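The "should be 4% slower" estimate is just the fraction of threads given up: with 24 logical CPUs and -smp 23, that is 1/24, or about 4.2%, if SMP throughput scaled linearly with thread count. That is an idealizing assumption (a Hyper-Threaded sibling does not add a full core's worth of throughput), so treat the result as a rough upper-bound style estimate. A minimal Python sketch:

```python
def expected_slowdown(total_threads, smp_threads):
    """Fractional slowdown if SMP throughput scales linearly with thread count.

    Idealized assumption: real scaling is rarely perfectly linear.
    """
    if not 0 < smp_threads <= total_threads:
        raise ValueError("smp_threads must be between 1 and total_threads")
    return 1 - smp_threads / total_threads


if __name__ == "__main__":
    # SR-2 with 24 logical CPUs, bigadv running -smp 23.
    print(f"{expected_slowdown(24, 23):.1%}")  # 4.2%
    # Single hex with HT: 12 logical CPUs, -smp 11.
    print(f"{expected_slowdown(12, 11):.1%}")  # 8.3%
```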


I hope you have a 200-series card or more recent. Reduce your CPU utilization for the GPU down to 80% or possibly a little lower. You will lose about 2000 PPD on the card but retain a lot of the SMP client's performance. I cannot confirm this setup without a hex-core. This is a tip recently posted in another thread, so YMMV.

He's running Fermi, been chatting with him all night on IRC. Fermi is a CPU whore.

Yep, but if he can strike the proper balance, it might be worth it. It's not going to be easy and may require a combination of core dedication and lower CPU utilization, just a wild guess. Wish I had this kind of hardware dilemma, LOL.

yeah, he's a bastid.. wish I had that config to test for him.

luv ya musky one.

Yeah, I actually think that is a good bit of the problem. At the risk of sounding like an elitist prick, I think a lot of the problem is that this machine is so freaking fast that any percentage of CPU lost to the GPU client is amplified in a big way. That is why I am hoping DooKey or TJ can chime in with what they are doing with their equally fast 980s + GPUs.
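One likely contributor to that amplification, assuming the bigadv units carry the quick-return bonus (final points = base points × max(1, √(k × deadline / elapsed time))): while the bonus is in effect, PPD scales roughly as (time per WU)^-1.5, so a given percentage slowdown costs about 1.5 times that percentage in PPD, and more in absolute terms on a faster box. The k and deadline values below are illustrative only, not real project constants:

```python
import math


def qrb_ppd(base_points, k, deadline_days, elapsed_days):
    """Points per day under the assumed quick-return bonus formula:
    points = base * max(1, sqrt(k * deadline / elapsed))."""
    if elapsed_days <= 0:
        raise ValueError("elapsed_days must be positive")
    bonus = max(1.0, math.sqrt(k * deadline_days / elapsed_days))
    return base_points * bonus / elapsed_days


def ppd_ratio_after_slowdown(slowdown):
    """Fraction of PPD retained when a WU takes (1 + slowdown) times as long,
    assuming the bonus factor stays above 1 (so PPD ~ time ** -1.5)."""
    return (1 + slowdown) ** -1.5


if __name__ == "__main__":
    # Illustrative values: a WU that takes 10% longer.
    before = qrb_ppd(1000, 26.4, 6.0, 2.0)
    after = qrb_ppd(1000, 26.4, 6.0, 2.2)
    print(f"PPD lost: {1 - after / before:.1%}")  # PPD lost: 13.3%
```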

sirmonkey1985

[H]ard|DCer of the Month - July 2010

- Joined

- Sep 13, 2008

- Messages

- 22,414


Problem is Fermi does not respond well to CPU usage being limited in the client; it goes ape shit.

Only thing I can think of is: instead of limiting the GPU client to a hyperthreaded core, try setting the GPU client to core 0 and see if it makes a difference PPD-wise. That, or don't limit it to a specific core, and Windows will bounce it around from thread to thread depending on which ones are loaded more than the others.

Other thing is, make sure you have your GPU client set to run at idle, not above idle. Try setting corea3.exe to above idle or normal and see if there's a PPD change between the clients. It's easier to test it this way, because if it doesn't make a difference it will just reset the priority setting on the next WU.

Relevant thread where I started a subtopic on this theme, post #64 is my source: http://hardforum.com/showthread.php?t=1548531

Forgot my specs:

i7 970 @ 4.36 GHz

GTX 460 @ 1780 shaders

smp client: -smp x -bigadv -forceasm

gpu client: -advmethods

The x in the smp client is either 11 or removed (smp 12).

Sorry, I might be out of the loop but gpu client: -advmethods?

Is there a bonus for GPU?

No.

sirmonkey1985


The -advmethods flag is just so the client can run the open beta WUs.

tjmagneto

[H]ard DCOTM x2

- Joined

- Aug 6, 2008

- Messages

- 3,295

After all of this time I'm still trying to figure out the magic combination and had a little time to look at it this morning.

Right now I have my 980X running at 4.3 GHz and my two GTX 465s set to 1330 MHz for the shaders. I'm folding a 2686 with the CPU, and the GPUs are working on an 11225 and an 11234. Folding SMP only, I was around 65k. Folding with everything, both GPUs get 12k PPD each, but the bigadv takes about a 10k hit for me. I'm netting more overall, but I don't see that as being efficient at all.

Since I still have a few hours left folding the 2686, I'll try backing off the CPU utilization setting and see what happens overnight.

@CamaroZ06: setting the -advmethods flag for Fermi will not get you a bonus but will get you WUs besides the 611 pointers. I saw my ppd go up with these newer WUs
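The trade-off tjmagneto describes is simple arithmetic: the GPUs pay off only when their combined PPD exceeds the PPD the SMP client loses while they run. A sketch using his approximate figures above (65k SMP-only, about a 10k bigadv hit, roughly 12k per GTX 465); the function name is hypothetical:

```python
def net_gain(smp_only_ppd, smp_hit_ppd, gpu_ppds):
    """PPD gained (negative if lost) by adding GPU clients, given the
    SMP-only baseline and the SMP loss observed with the GPUs running."""
    combined = (smp_only_ppd - smp_hit_ppd) + sum(gpu_ppds)
    return combined - smp_only_ppd


if __name__ == "__main__":
    gpus = [12_000, 12_000]
    gain = net_gain(65_000, 10_000, gpus)
    print(gain)  # 14000
    # Share of the GPUs' nominal output that shows up as net gain.
    print(f"{gain / sum(gpus):.0%}")  # 58%
```

In other words, only about 14k of the GPUs' nominal 24k shows up as extra PPD, which is the inefficiency he is pointing at.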


Just want to add a little something here: I'm not running -bigadv as I "only" have an X6, but 4 GPU2 clients allowed me to get ~10.8-11.2k PPD with 0% CPU usage in Task Manager.

I'm currently running a 460 and my 275/250 combo card, so 1 GPU3 and 2 GPU2 clients. Max PPD I've seen is 9.5k, so that's a 1.3-1.7k loss on a less powerful chip on normal A3 work. Watching Task Manager showed that core15.exe was using 2-4% of the CPU, but it wouldn't stay constant; it was all over the place. -smp 5 held my PPD steady at 9.5k, so I don't see a way out for any of us at the moment.

I remember that GPU2 had the same problem last year until NVIDIA updated the drivers, so that may be the problem.


may i be worthy

[H]ard|DCer of the Month - December 2010

- Joined

- Aug 17, 2010

- Messages

- 1,186


I just did a lot of testing on this, and found -smp 23 to be better than I thought. Losing only about 6K PPD, I thought woohoo, let's try two GTX 460s, and ran out and bought another (getting them to run reliably is a separate education).

If I set -smp 23 or 22 then standard units FAIL! If I only pulled bigadv I would be fine, but this kills my method and will kill my 80% or better completion rate - how are you getting this to work?


This is the second time I have heard this. The other was with a single L5640 that will not run -smp 11. My single hex has been running for 12 hours now at -smp 11, but that is a bigadv unit. Maybe we are on to something here.

may i be worthy


Post #11 here tipped me to it while googling...

http://forums.guru3d.com/showthread.php?p=3706375

Had quite the shitty weekend trying to get 2 GTX-460s working as well, testing with a captured bigadv - then finding trying it for real might all be for nothing if I can't do standards...


I'm glad to hear your experiences in this thread. I've been looking at my SR2 box (dual quads with two GTX470s) with disgust lately on account of the low PPD numbers I've been getting from my CPUs.

Has anybody tested dual quads with SMP -15?

How many cores do you think two Fermi clients need? I'm thinking I'm going to try SMP -15 and let Windows 7 handle the thread distribution among the cores. It's supposed to be good at that.


DooKey

[H]F Junkie

- Joined

- Apr 25, 2001

- Messages

- 13,562

I'm still experimenting musky. Right now I have my gpu's set to 80% usage and I'm running -smp 11 on the cpu client. I'm currently crunching a 2684 and I'm getting 31:43 TPF for 33498 PPD. My 480s at 750/1500 are getting 12276 PPD each on the 10632 wu. Total PPD is 58050 PPD.

I'm going to keep tweaking to see if I can improve that.
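For comparing setups, a TPF converts directly into time per WU. A minimal sketch, assuming the WU is tracked as 100 frames of 1% each (the usual convention in FAH progress logs and monitoring tools); at DooKey's 31:43 TPF the P2684 would take roughly 52.9 hours. His total also checks out: 33,498 + 2 × 12,276 = 58,050 PPD.

```python
def parse_tpf(tpf):
    """Parse a 'MM:SS' (or 'HH:MM:SS') time-per-frame string into seconds."""
    seconds = 0
    for part in tpf.split(":"):
        seconds = seconds * 60 + int(part)
    return seconds


def wu_hours(tpf, frames=100):
    """Hours to finish a WU, assuming it is reported as `frames` equal frames."""
    return parse_tpf(tpf) * frames / 3600


if __name__ == "__main__":
    print(f"{wu_hours('31:43'):.1f} h")  # 52.9 h
```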


Your 480s should be doing better at 80%. I just backed my 460 down to 80% and it dropped from 14.3K to 12.3K PPD. This seems to have had no effect at all on the bigadv times, which I would actually expect, since I have the GPU client isolated to one CPU core and the SMP client isolated to the other 11 with -smp 11. What I don't understand at all is why shutting down the GPU client in this configuration has any effect on the SMP client. I think it has to do with actual versus virtual cores, as sirmonkey hinted earlier. Since the actual and virtual pair of CPUs is linked, I don't think you truly isolate one without some effect on the other.

Shutting down isolated GPU clients on my systems without HT also has a very significant effect on the SMP client, to its betterment. I believe it is more than the CPU cycles that GPU clients cannibalize from the SMP client: there are other resources that both clients no doubt share, which are then released for full utilization by the SMP client. I think these shared resources probably involve the memory subsystem to some degree, and supersede core-specific shared resources. This is just a pure guess on my part.


I saw the same thing, MIBW. I woke up this morning to 64 straight WU failures on the SR-2, until it finally pulled a P2684. I'll go back to just -smp and see how my frame times are affected.

tjmagneto


Just like DooKey, I'm still experimenting, but I ought to be able to do better.

I let my system run overnight with the 465s set to 75%. The only change I saw was lower ppd from the GPUs. The CPU use according to Task Manager was still running at 2% per GPU, sometimes hitting 3% momentarily. I put the 465s back to 100% and overall ppd went up by 4k and that included a small hit to SMP production.

Looks like it's time to try something else but I called in to work and scan brains so this will have to wait.

One last thought: somewhere out there an ATI owner is laughing.


I suggest trying to run smp (cores - 1) and letting Windows 7 handle affinity.

I've done that as mentioned above on my SR2 running smp -15. CPU usage is right around 97-98% with two GTX470 clients running, and my last three TPF on a 2686 is 19:05 for 74k ppd. I don't think I've seen PPD that high since I installed these GPUs.

I would like to know if anybody else has similar results.
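The "cores minus one" suggestion is easy to state as a rule, together with the -smp values reported in this thread as failing on standard (non-bigadv) units. A minimal sketch; the function names are hypothetical and the failure set is anecdotal, taken only from posts in this thread, not from any official list:

```python
# -smp values reported in this thread as failing on standard (non-bigadv) WUs.
# Anecdotal only; not an official list.
REPORTED_STANDARD_WU_FAILURES = {11, 22, 23}


def suggested_smp(logical_cpus, reserved=1):
    """'Cores minus one' rule: leave `reserved` logical CPUs for GPU clients."""
    smp = logical_cpus - reserved
    if smp < 1:
        raise ValueError("need at least one thread for the SMP client")
    return smp


def check_smp(logical_cpus, reserved=1):
    """Return (smp value, True if that value was reported to fail standard WUs)."""
    smp = suggested_smp(logical_cpus, reserved)
    return smp, smp in REPORTED_STANDARD_WU_FAILURES


if __name__ == "__main__":
    for cpus in (12, 16, 24):
        print(cpus, check_smp(cpus))
```

That would explain why the dual-quad SR2 at -smp 15 has not hit the problem, while the hex and dual-hex boxes at 11, 22, or 23 have.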


tjmagneto


I just had a 2685 start but had to go back to work again. I'll dial it back to -smp 11 and see what happens.

86 5.0L

Supreme [H]ardness

- Joined

- Nov 13, 2006

- Messages

- 7,085

I had better points WITHOUT the -advmethods on the GPU

may i be worthy


Son of a bitch

(what is happening, not you)

I will post my tests soon, but until that is fixed it looks like I have wasted a day and a GTX460. Well, Far Cry 2 is happy, but not FAH.

Well, I am no further now than I was two days ago. Every combination of smp number and core priority produced unacceptable results. That, along with the reports of -smp 11, 22, and 23 (and I would guess 21 as well) causing WU failures, means it does not look like I will be running the GPU client. It is not worth the electricity it uses for the 3K PPD it yields me.

may i be worthy


GOODBYE DREAM OF 150,000 PPD in one box.

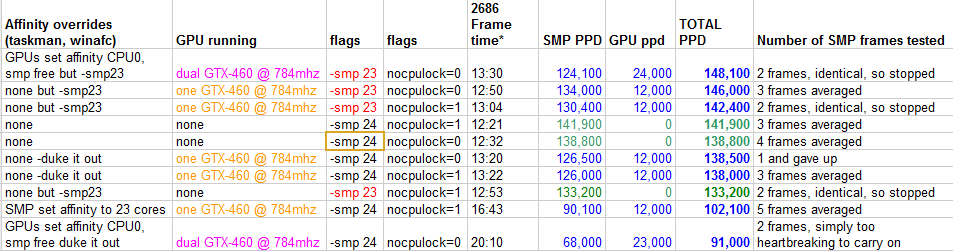

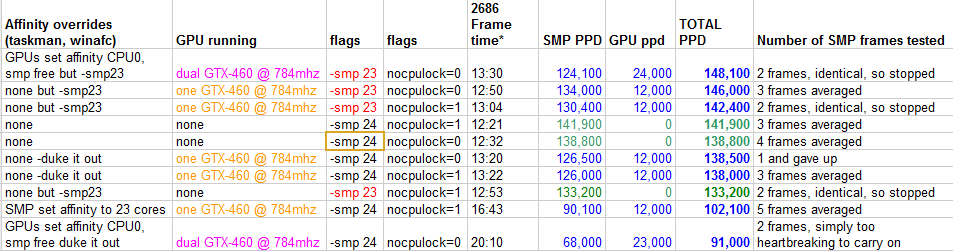

Yup, with the -smp 23 flag being a standard unit killer, the game is up. Here are the results of my 5 hours of tests. This is with a captured 2686 unit, reset and then started again, so it is calculating the exact same frames.

I agree with you guys it is back to just SMP for me until they fix that flag issue.

Not a total loss: when I am rendering animations, etc., which means I quit bigadv for a few days, I can still kick on dual GTX 460s. Now I might play a game and see what SLI can do.


That's unfortunate.

I'm still hoping somebody else has a chance to run smp -15.

I think I'm seeing good results so far.

So, lesson being: if you have an 8-core boxen, stick your GPUs in that one.

tjmagneto


So far for me -smp 11 has been working ok. Total ppd is around 75k with a 2685 smp WU and both 465s folding.

I guess this is progress???

What do you get without the GPUs and a P2685?

may i be worthy


Looks like we are stuffed. This has been broken for a while, and if they were keen on fixing it, it would have been done by now. I suspect it is not an easy tweak.

It would be easier to implement a "bigadv or NOTHING please" flag and we would be fine.
