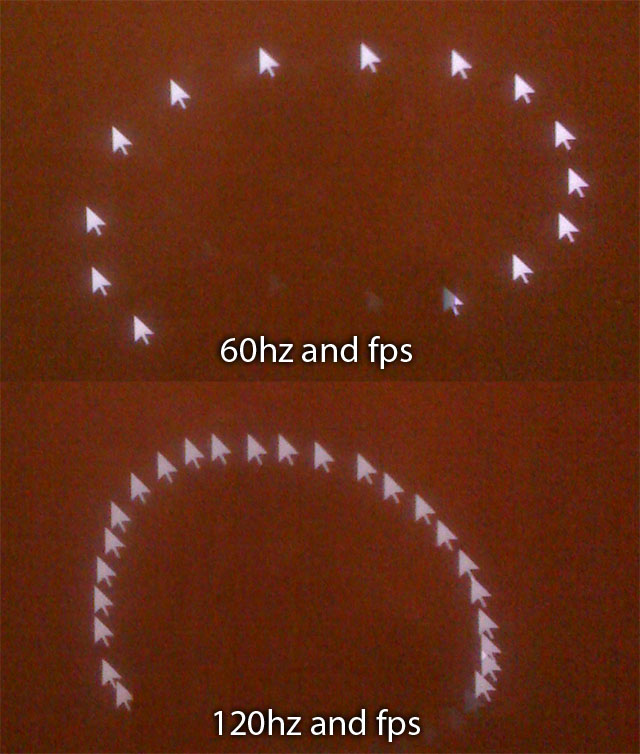

So I pitted my Acer 120Hz LCD against an older LCD and a CRT, using a high-speed camera.

I have no DVI splitter cable, so this was done in clone mode, using two different DVI outputs on the same video card. Cloning is known to add input lag, as is running a non-native resolution, so as a test of actual input lag this fails, as will soon become obvious. I knew all this going in, but hell, it's neat, so I did it anyway.

Acer235hz vs old Samsung 204T

The old Samsung was not known for a responsive screen, but I was curious how this would turn out. Results: there was not always a difference; some frames showed exactly the same time. But when there was a difference, it was always 33ms in the Acer's favor. Weird.

33ms difference

Exposure time here is 1/250th of a second (4ms), which looks fairly normal.

Acer235hz vs MAG986FS

Next, the old CRT gets dragged out of a closet for this test.

Faster shutter speed now: only 1/1000th of a second, or 1ms. Things start looking weird.

If you look closely, you can see the Acer ghosting from 944 to 977. The CRT is already a frame ahead, at 38 seconds 10ms, ghosting 977 and the 7 from '37'.

Agreement!

Exposure time is now 1/8000th of a second.
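To put those shutter speeds in perspective, here is a quick Python sketch that converts each exposure to milliseconds and compares it with one refresh of the 120Hz Acer (about 8.33ms) and one step of the timer. The 30 FPS timer rate is an assumption inferred from the 33ms jumps between readings (944, 977, ...), not a published spec for the timer.

```python
from fractions import Fraction

# Assumed rates: the Acer refreshes at 120 Hz; the flash timer is assumed
# to redraw at ~30 FPS, based on the 33 ms jumps between its readings.
REFRESH_PERIOD_S = Fraction(1, 120)
TIMER_PERIOD_S = Fraction(1, 30)

def exposure_table(denominators=(250, 1000, 8000)):
    """For each 1/N s exposure, return (N, exposure in ms,
    fraction of one 120 Hz refresh, fraction of one timer step)."""
    rows = []
    for n in denominators:
        exposure_s = Fraction(1, n)
        rows.append((
            n,
            exposure_s * 1000,              # exposure in milliseconds
            exposure_s / REFRESH_PERIOD_S,  # share of one 120 Hz refresh
            exposure_s / TIMER_PERIOD_S,    # share of one timer step
        ))
    return rows

for n, ms, refresh_share, timer_share in exposure_table():
    print(f"1/{n} s = {float(ms):.3f} ms, "
          f"{float(refresh_share):.3f} of a refresh, "
          f"{float(timer_share):.4f} of a timer step")
```

Every exposure used here is shorter than a single 120Hz refresh, let alone a timer step, so each photo freezes the screens at one instant of their update, which may help explain why the ghosted digits become visible at the faster shutter speeds.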

So why the magic number, 33? The most likely reason is that 33ms ≈ 1/30th of a second, i.e. 30 FPS, so I'm maxing out the capability of my poorly chosen flash-based timer. On the plus side, it also means the slower screen was never 2 or more frames behind, or we would be seeing 66, 99, etc.
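The quantization argument can be checked with a small Python simulation. It is a hypothetical model, not a measurement: the timer is assumed to advance in 30 FPS steps, and each screen is assumed to show it after a fixed lag with no refresh quantization of its own. Taking photos at random moments, a true lag gap under one step shows up as either 0 or 33ms, while a gap of more than one step would never show 0 and would start producing 66ms readings.

```python
import math
import random

# Assumption: the flash timer redraws at 30 FPS, so its reading only
# changes every ~33.3 ms (the post's "magic number").
TIMER_STEP_MS = 1000 / 30

def timer_reading(t_ms: float) -> float:
    """Value the timer shows at time t: the start of the current step."""
    return math.floor(t_ms / TIMER_STEP_MS) * TIMER_STEP_MS

def observed_gap_steps(photo_t_ms: float, lag_fast_ms: float, lag_slow_ms: float) -> int:
    """How many timer steps the faster screen appears ahead in one photo.

    Hypothetical model: each screen shows the timer exactly lag_x_ms late,
    with no refresh-rate quantization of its own.
    """
    fast = timer_reading(photo_t_ms - lag_fast_ms)
    slow = timer_reading(photo_t_ms - lag_slow_ms)
    return round((fast - slow) / TIMER_STEP_MS)

def possible_gaps(lag_fast_ms: float, lag_slow_ms: float,
                  shots: int = 1000, seed: int = 0) -> list[int]:
    """Simulate photos taken at random moments; return the distinct gaps seen."""
    rng = random.Random(seed)
    gaps = {
        observed_gap_steps(rng.uniform(10_000, 20_000), lag_fast_ms, lag_slow_ms)
        for _ in range(shots)
    }
    return sorted(gaps)

print(possible_gaps(0, 10))  # true gap 10 ms: shots show 0 or 1 step
print(possible_gaps(0, 40))  # true gap 40 ms: shots show 1 or 2 steps
```

Seeing only 0 and 33ms, as in the Samsung comparison, fits a true difference somewhere under one timer step; a finer-grained timer would be needed to pin it down.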

I will have to do a 'round two' later with a proper DVI splitter and a decent timer. The larger issue of non-native resolution will not be resolved with this equipment. Does anyone have a recommendation for a timer?


![[H]ard|Forum](/styles/hardforum/xenforo/logo_dark.png)