spectrumbx

[H]ard|Gawd

- Joined

- Apr 2, 2003

- Messages

- 1,647

My setup:

ESXi server specs:

iSCSI target:

iSCSI initiator

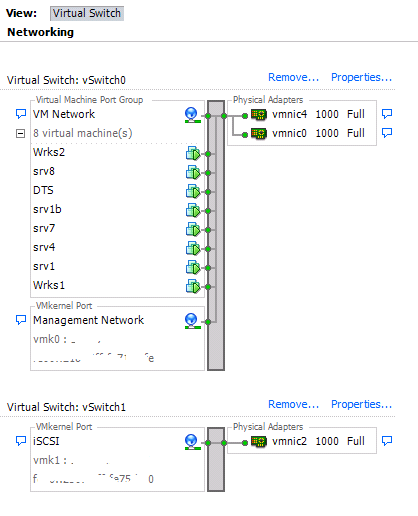

Network config:

SATA drives (used to host the iSCSI image file):

Virtual drives on iSCSI target:

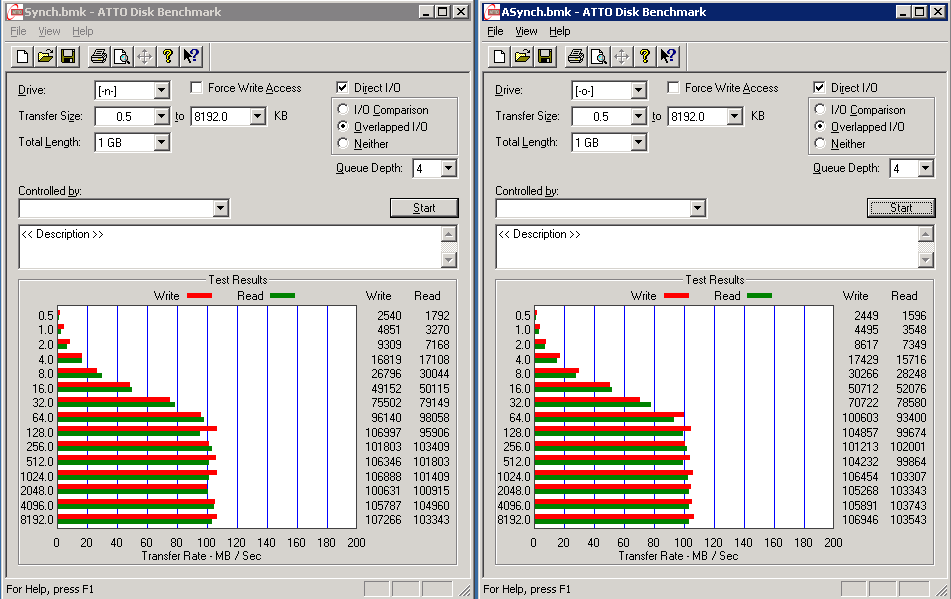

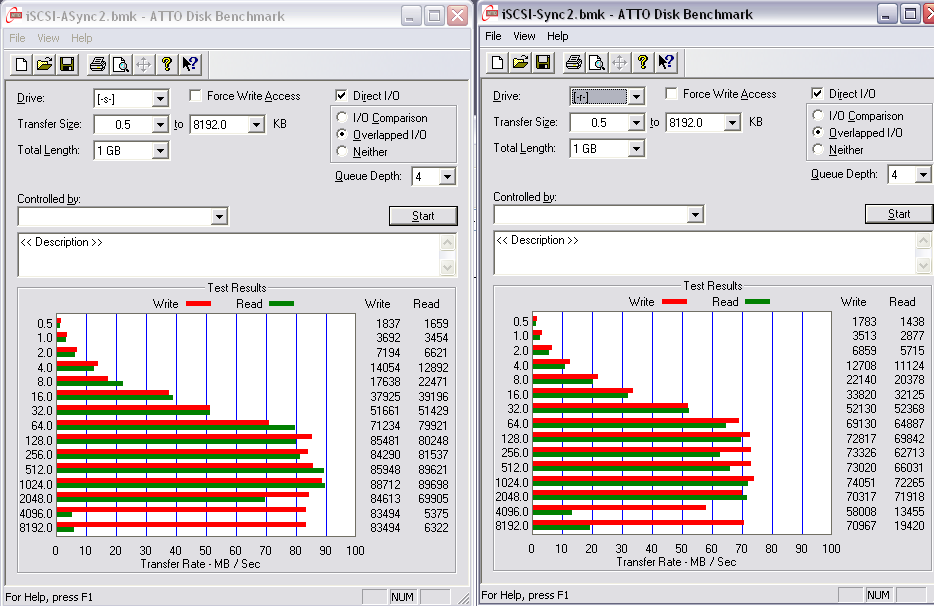

The tests for the virtual drives were run on an XP-based VM, and the OS for that VM was running off a third iSCSI target.

I have repeated these tests at least 5 times each, and the results (and anomalies) are fairly consistent.
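For anyone who wants to reproduce this kind of repeated test without the benchmark tool, here is a rough Python sketch of a write sweep across block sizes. It is not the tool used for the screenshots; the function names, the `bench.tmp` file name, and the default sizes are all made up for illustration, and it assumes the target directory sits on the drive under test.

```python
import os
import statistics
import time


def write_throughput(path, block_size, total_bytes):
    """Write about total_bytes to path in block_size chunks and return MB/s."""
    block = os.urandom(block_size)
    blocks = max(1, total_bytes // block_size)
    start = time.perf_counter()
    with open(path, "wb", buffering=0) as f:
        for _ in range(blocks):
            f.write(block)
        # Force the data out to the device so OS caching does not hide the cost.
        os.fsync(f.fileno())
    elapsed = max(time.perf_counter() - start, 1e-9)
    return (blocks * block_size) / elapsed / 1e6


def sweep(directory, sizes_kb=(64, 256, 1024, 2048, 4096, 8192),
          total_mb=64, runs=5):
    """Return {block size in KB: median MB/s over `runs` repetitions}."""
    path = os.path.join(directory, "bench.tmp")  # hypothetical scratch file
    results = {}
    for kb in sizes_kb:
        samples = [
            write_throughput(path, kb * 1024, total_mb * 1024 * 1024)
            for _ in range(runs)
        ]
        results[kb] = statistics.median(samples)
    if os.path.exists(path):
        os.remove(path)
    return results
```

Calling `sweep("E:\\")` (or whatever path the iSCSI-backed drive is mounted at) returns one median figure per block size, which makes it easy to see whether the drop above 2MB shows up outside the original benchmark tool as well.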

In the images, "ASync" refers to an iSCSI target created with multi-threading support (multi-threaded file system capabilities), while "Sync" refers to a target created without multi-threading.

I am not sure whether the differences in the benchmarks above are due to the asynchronous vs. synchronous behavior of the iSCSI targets or whether a networking issue is at play here.

Questions:

1. Why do my write speeds take a major hit above 2MB?

2. Should I place the VM hosting the iSCSI targets on the same switch as the ESXi iSCSI initiator? Why?

3. Can someone give me some pointers or comments on this whole async vs. sync iSCSI target thing?

Thanks.

ESXi server specs:

- Dual quad-core (2x 8356) @ 2.415GHz

- 16GB (4x 4GB) DDR2 667MHz ECC REG w/ 4-bit parity [Chipkill]

- 4x Gigabit NICs

- Intel 160GB SSD G2 (the only local datastore)

- 8+ TB storage (used by a single VM)

iSCSI target:

- Win 2003 R2 VM (512MB RAM / 2 vCPUs) running on ESXi

- SATA drives passed to the VM as physical RDMs

- The first 50GB of each drive is partitioned to host an iSCSI virtual image file

- Running Starwind (free edition)

- 2 virtual NICs (one dedicated to iSCSI), both connected to the same virtual switch (the switch has 2 NICs for load balancing)

iSCSI initiator

- ESXi with a dedicated NIC
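As a sanity check on the network side, the raw ceiling of the link can be worked out with a few lines of Python. This is only a sketch: it gives the raw line rate with no allowance for Ethernet, TCP/IP, or iSCSI overhead, and it assumes a single iSCSI stream rides one gigabit link even when the virtual switch has two NICs for load balancing. Both helper names are made up for illustration.

```python
def link_ceiling_mb_per_s(link_gbps=1.0, links=1):
    """Raw line rate in MB/s (decimal megabytes), ignoring protocol overhead."""
    bits_per_second = link_gbps * 1e9 * links
    return bits_per_second / 8 / 1e6


def fraction_of_ceiling(measured_mb_per_s, link_gbps=1.0, links=1):
    """How close a measured throughput comes to the raw line rate (0.0 to 1.0+)."""
    return measured_mb_per_s / link_ceiling_mb_per_s(link_gbps, links)
```

With the defaults, `link_ceiling_mb_per_s()` gives 125.0, so any benchmark figure well above roughly 125 MB/s over the dedicated gigabit NIC is more likely showing caching than real transfers over the wire.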

